A year or so ago, I was looking into ways to improve releases at my current job. We have a whole bunch of small legacy .NET applications that, until 3 years ago, were deployed manually onto a server provided to us by a third party. After migrating things to Azure, the sky was the limit for us. It was time to take control of our releases and make them better.

Most of the newer applications are based on .NET Core and are now developed using TDD and other good practices.

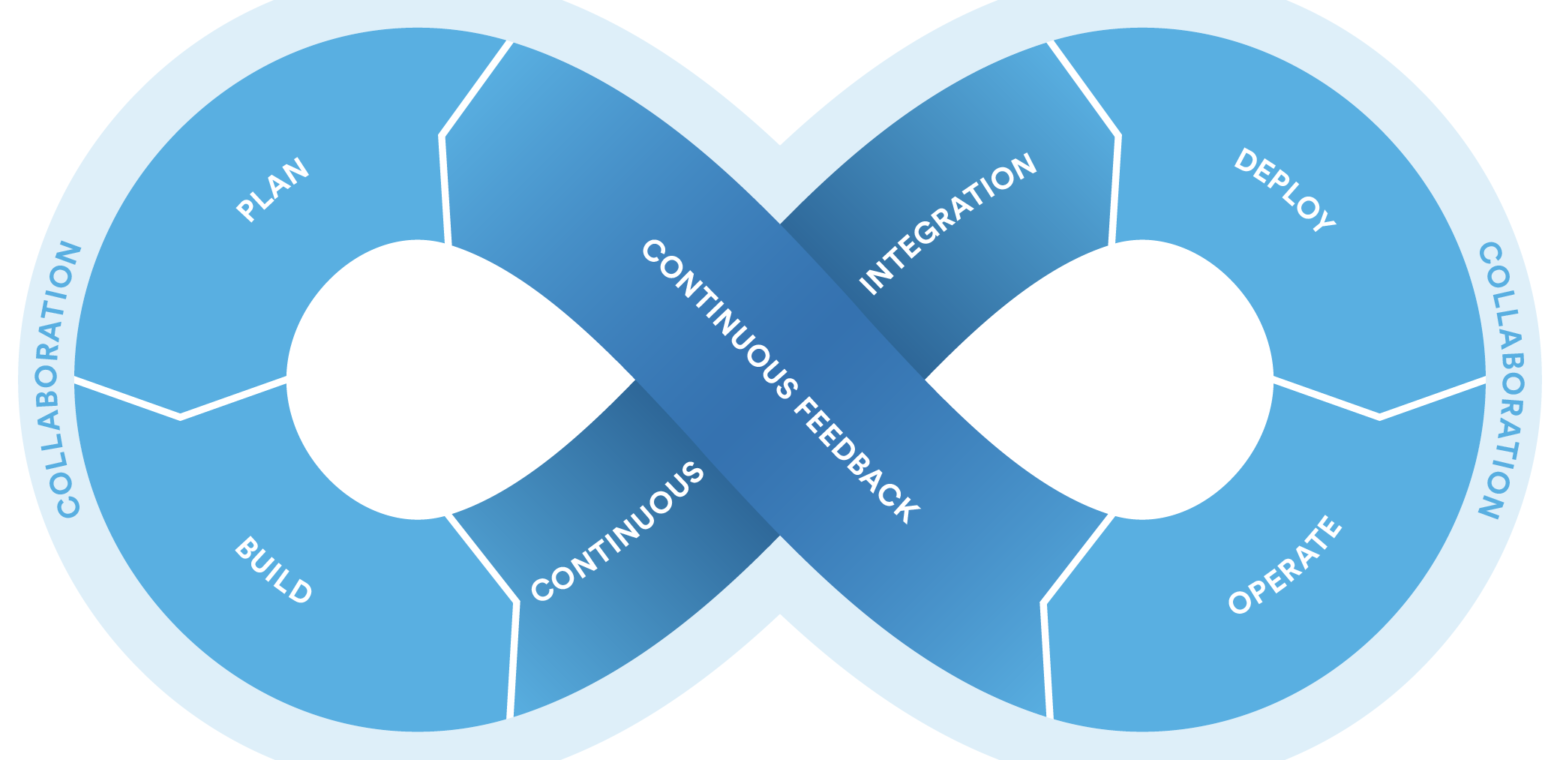

The idea was to get to a place where we could have continuous integration. Once we had continuous integration, we could think about continuous delivery and deployment.

I know these words are thrown around in meetings so often, without anyone getting into the details, that you probably think I am doing the same to you now. I understand the pain, so I will describe them in simple words.

By the way, a friend who was boasting about the continuous deployment strategies used at his firm recently asked me which deployment strategies I use at work. I had to recollect everything I had read, so I thought: why not write about it?

Let us get into exploring some of the details.

What is DevOps?

I bet you have already noticed job descriptions asking for a DevOps Engineer. What on earth is this engineer? Often, this job title is the result of a misunderstanding of DevOps.

That said, I should be clear: some companies do understand what DevOps is and choose to have DevOps Engineer as a role, to emphasise that such an engineer must have the skills to do both development and operations. A bit of a developer, a bit of a sysadmin. A new breed. Actually, if you ask me, plenty of engineers already fit these criteria. They may not have the official title yet, but that is only down to the organisation they work for.

But I digress. So let us get back to the topic.

The idea behind DevOps is to break down silos and work together: collaborate early on and produce better applications and products.

If you are a software engineer today, you probably already work in a DevOps environment. If your team is responsible for developing, testing and deploying its own code, with the help of a platform team and maybe some security experts, then you are most likely already in a good place.

The word DevOps, as you might have guessed already, is a portmanteau of two words: Development and Operations.

This became a big game-changer because of how things used to work. When I started my career as a software engineer, I worked in a team in Mumbai developing PL/SQL packages, which we then handed over to a Quality Assurance team in Pune. Once QA approved the code, it was passed on to the application support (operations) team in the United Kingdom (I do not remember exactly where), who were responsible for deploying it to the production servers of our client at the time, British Telecom. This was the norm in most large organisations.

An example of how things used to be

As a developer, I could forget to mention something in the release notes. The people responsible for deployment would then suffer and have to call me on my phone at the oddest of times to understand how to deploy the application. And yes, it has happened.

Now that the problem is clear, let us move on to understand what the continuous x, y and z are.

What is Continuous integration?

Continuous integration is the practice of automatically integrating code changes from multiple contributors into a single software project, with automated tests verifying each change. This integration can happen several times a day or several times a week, without anyone having to go through a painful merge hell. It is considered a DevOps best practice!

Why is this so cool?

Think of it this way: say your IDE is fast and slick, runs tests really quickly and tells you straight away whether they pass or fail. Compare that to an IDE that is sluggish and takes at least 10 minutes to run tests. Which one would you prefer? You want to know what is broken as soon as possible, because that is when you are most likely to remember what might have broken it.

One of the key tools for continuous integration is a version control system, without which you would have no idea which version of the code is the main one that gets deployed. Built around the version control system are other tools that automate code quality checks using static and dynamic code analysis, followed by automated unit and integration tests.
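To make this concrete, here is a minimal Python sketch of what a CI server does with every pushed commit: run the stages in order and stop at the first failure so the author gets feedback quickly. It assumes each stage is a plain callable returning True on success; the lambdas are hypothetical stand-ins for a real compiler, static analyser and test runners, not any particular CI product's API.

```python
from typing import Callable

# A stage pairs a name with a check that returns True when the stage passes.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(commit: str, stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure (fail fast)."""
    log: list[str] = []
    for name, check in stages:
        passed = check()
        log.append(f"{commit}: {name} {'passed' if passed else 'FAILED'}")
        if not passed:
            # Report straight away, while the change is still fresh in the author's mind.
            return False, log
    return True, log


# Hypothetical stand-ins for a compiler, static analyser and test runners.
stages: list[Stage] = [
    ("build", lambda: True),
    ("static analysis", lambda: True),
    ("unit tests", lambda: True),
    ("integration tests", lambda: False),  # simulate a broken integration
]

ok, log = run_pipeline("abc123", stages)
print("\n".join(log))
```

Stopping at the first failing stage is a design choice: it keeps the feedback loop short, at the cost of not learning about later failures in the same run.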

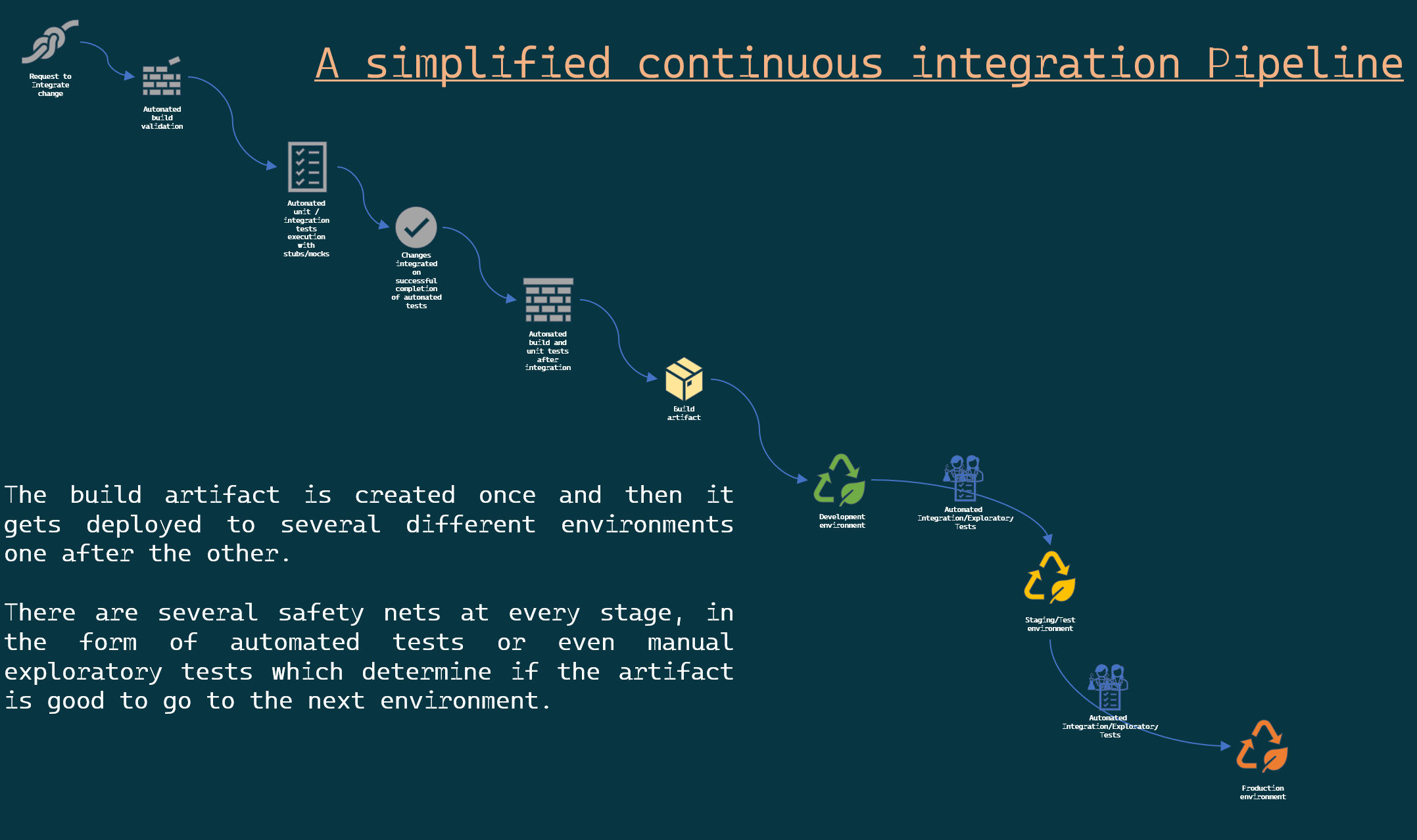

That is the power of continuous integration! The image above gives an idea of what it looks like.

Why do companies still not do this?

Well, it is not easy to adopt continuous integration in an application with few or no automated tests. To trust an application, you need tests. In the beginning, these are generally done manually. Good developers will write unit tests, but because integration tests are relatively harder to write, developers may not invest time in building an integration test suite at all. As a result, you cannot trust your code changes unless someone actually tests the application end to end!

This is the biggest obstacle to continuous integration. To adopt automated integration with confidence, you need automated tests that provide that confidence. Tools alone do not; developers have to write the tests. For developers to do so, product owners, business owners, or whoever needs the working software must invest in the additional time required to build these automated safety nets we affectionately call unit and integration tests.

The problem begins when a software development team does not consider these safety nets part of the development process. Delivery estimates are then given without accounting for the work involved in writing them, and those estimates are communicated to clients. Automated safety nets are therefore perceived as additional work when in reality they are part of the work. The longer this goes on, the bigger the gap in automated test coverage, and the harder it becomes to adopt continuous integration.

This is what I have seen happen in the places where I have worked. I am sure there are other reasons, but I am here to share my experience.

Continuous Delivery

Continuous delivery is usually abbreviated as CD, which can be confusing because the D could just as well stand for deployment! So always clarify what people mean when they just say CD, unless they are talking about compact discs.

Continuous delivery is an extension of continuous integration. This is where the software is packaged into an artifact that is ready to be released to end users at any time.

Now, this definition often gets mixed up with deployment, even on the websites of large companies, so I am pretty sure someone is going to challenge it, and I would accept that as sensible. So let us take a look at deployment next.
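To picture the packaging step, here is a Python sketch that zips a build output directory once into an immutable, versioned artifact, so the exact same file can later be promoted to every environment. It assumes the build output is a plain directory; the `package_artifact` function and the `app-<version>-<hash>.zip` naming scheme are hypothetical illustrations, not a standard.

```python
import hashlib
import zipfile
from pathlib import Path


def package_artifact(build_dir: Path, out_dir: Path, version: str) -> Path:
    """Zip build_dir into a versioned artifact whose name includes a content hash."""
    files = sorted(p for p in build_dir.rglob("*") if p.is_file())
    digest = hashlib.sha256()
    for path in files:
        rel = path.relative_to(build_dir).as_posix()
        # Hash each relative path with a fixed-size hash of its contents,
        # so identical build output always produces the same artifact name.
        digest.update(rel.encode() + b"\0" + hashlib.sha256(path.read_bytes()).digest())
    out_dir.mkdir(parents=True, exist_ok=True)
    artifact = out_dir / f"app-{version}-{digest.hexdigest()[:12]}.zip"
    with zipfile.ZipFile(artifact, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in files:
            zf.write(path, arcname=path.relative_to(build_dir).as_posix())
    return artifact
```

Putting a content hash in the name makes it easy to check later that what reached production is byte-for-byte what passed the pipeline, rather than something rebuilt along the way.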

Continuous Deployment

The next step is the dream for most companies: automatically deploying the output of the continuous delivery stage to the target environment. The key word is automatically, because that is what makes it continuous deployment. If a human has to press buttons to initiate this, then you have not reached this final stage of amaze-balls DevOps practice!

Why is it not a reality for many yet?

First, there is the question of rolling back something that has been deployed, which may not be trivial. If you have the infrastructure to deploy automatically after a set of great automated tests, you also need to discuss how to undo those deployments when something fails after release. In fact, the development team has to decide on deployment strategies to make this a reality.
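Here is a minimal Python sketch of an automated deploy step with a rollback path: deploy the new version, run post-deployment smoke tests, and restore the previous version if they fail, with no human pressing buttons. The `Environment` class and the `smoke_test` callable are hypothetical; a real pipeline would call your hosting platform's deployment tooling instead.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Environment:
    """Hypothetical target environment that records which version is live."""
    name: str
    live_version: str
    history: list[str] = field(default_factory=list)

    def deploy(self, version: str) -> None:
        self.history.append(self.live_version)
        self.live_version = version


def continuous_deploy(env: Environment, version: str,
                      smoke_test: Callable[[str], bool]) -> bool:
    """Deploy automatically; roll back to the previous version if smoke tests fail."""
    previous = env.live_version
    env.deploy(version)
    if smoke_test(env.live_version):
        return True
    # Rollback is simply another deployment of the last known-good version.
    env.deploy(previous)
    return False
```

Treating rollback as a redeploy of the last known-good version only works cleanly if things like database changes stay backward compatible, which is a big part of why rollback is not trivial.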

Anything automated also needs continuous, real-time monitoring and alerting, so that those involved hear about problems as soon as possible. Automated health checks on dependencies are among the most common things applications run on deployment.
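As an illustration of automated health checks on dependencies, this Python sketch runs a probe per dependency concurrently, treats errors and slow responses as unhealthy, and produces an overall status that a load balancer or alerting system could poll. The dependency names and probe functions are hypothetical; real probes would open a database connection, call a downstream HTTP endpoint, and so on.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Callable


def check_health(probes: dict[str, Callable[[], None]],
                 timeout_s: float = 1.0) -> dict[str, object]:
    """A probe is healthy if it returns without raising within timeout_s."""
    results: dict[str, str] = {}
    # Note: leaving the with-block waits for slow probes to finish,
    # so a hung probe still delays the report in this simple version.
    with ThreadPoolExecutor(max_workers=len(probes) or 1) as pool:
        futures = {name: pool.submit(probe) for name, probe in probes.items()}
        for name, future in futures.items():
            try:
                future.result(timeout=timeout_s)
                results[name] = "healthy"
            except FutureTimeout:
                results[name] = "unhealthy: timed out"
            except Exception as exc:  # any error raised by the probe
                results[name] = f"unhealthy: {exc}"
    overall = "healthy" if all(r == "healthy" for r in results.values()) else "unhealthy"
    return {"status": overall, "dependencies": results}
```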

It is also hard to ensure that an application deployed in one environment will behave the same way in another. This is another obstacle to continuous deployment. From what I have read, a lot of teams use containerisation to solve this problem.

Finally, if you have not yet adopted continuous integration and delivery, continuous deployment is out of the question. It is the last mile in the race.

How to adopt all the continuous I and D’s

The journey to continuous integration has to start with educating the development teams on the value of automated unit and integration tests. After that, there are several other practices and tools to put in place:

- A good version control system: Git is my favourite

- Build and deploy pipelines as code: Azure DevOps YAML pipelines, for example

- Infrastructure as code: Terraform (probably the most popular), Chef, Puppet, Ansible, AWS CloudFormation, Azure Resource Manager, Google Cloud Deployment Manager and so on

- Containerisation: Docker, Docker Swarm, Marathon (an Apache Mesos framework), CoreOS, Packer, etc.

- Feature flags: releasing features to production behind flags that can be toggled on or off, for example through an API

- Trunk-based development
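Of the items above, feature flags are the easiest to show in a few lines. This Python sketch keeps flags in memory behind `set_flag` and `is_enabled`; in practice the store would sit behind an API or a feature-management service. The `FeatureFlags` class, the `new-checkout` flag and the `checkout` function are all hypothetical.

```python
import threading
from typing import Optional


class FeatureFlags:
    """Minimal in-memory flag store; a real one would be backed by an API or database."""

    def __init__(self, defaults: Optional[dict[str, bool]] = None) -> None:
        self._flags = dict(defaults or {})
        self._lock = threading.Lock()

    def set_flag(self, name: str, enabled: bool) -> None:
        # What a (hypothetical) admin endpoint would call to toggle a flag.
        with self._lock:
            self._flags[name] = enabled

    def is_enabled(self, name: str) -> bool:
        # Unknown flags default to off, so unfinished code stays dark.
        with self._lock:
            return self._flags.get(name, False)


flags = FeatureFlags({"new-checkout": False})


def checkout(cart_total: float) -> str:
    if flags.is_enabled("new-checkout"):
        return f"new checkout: {cart_total:.2f}"
    return f"old checkout: {cart_total:.2f}"
```

The new code path ships to production switched off, so deploying and releasing become separate decisions, and switching the flag off again acts as an instant rollback for that feature.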

What about DevSecOps?

The idea is to make sure there are automated tests for security too. There are tools for static and dynamic code analysis and security testing that can run as part of the pipeline.
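As a toy example of a static security check that could run in the pipeline, this Python sketch scans source text for a couple of obvious hard-coded secret patterns. The two rules are deliberately simplistic assumptions for illustration; real static analysis and secret-scanning tools use far richer rule sets.

```python
import re

# Deliberately simplistic, hypothetical rules; real scanners ship many more.
RULES = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hard-coded password": re.compile(r"""(?i)\bpassword\s*[:=]\s*["'][^"']+["']"""),
}


def scan(filename: str, text: str) -> list[str]:
    """Return one finding per line and rule that matches."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(f"{filename}:{lineno}: {rule}")
    return findings
```

In a pipeline, a non-empty list of findings would simply fail the build, just like a failing unit test.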

Continuous deployment strategies

There are so many ways to deploy your software today, thanks to the wide range of tools available to execute the many strategies.

Rolling Update

So you have a cluster of servers behind a load balancer. Let us call them A, B and C. Such creative names! Much wow!

Let us just say that you need to roll out version 2 of your software to this cluster using this strategy, also known as an incremental rollout.

Take A out of the load balancer pool, deploy the changes to A, then put A back in the pool.

Repeat the process for B and then C.

Now A, B and C all have the latest version of the application!

It is easy to understand and set up. However, it can be slow, and rolling back can be tricky. And until the new version has been deployed to all servers, two different versions will be served to clients at the same time.
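The rolling update steps can be sketched in Python as a loop: take one server out of the pool, upgrade it, check it, put it back, and stop if anything goes wrong. `LoadBalancer` is a hypothetical stand-in for your load balancer's API, and writing into the `versions` dictionary stands in for the actual deployment.

```python
from typing import Callable


class LoadBalancer:
    """Hypothetical load balancer that only routes to servers in its pool."""

    def __init__(self, servers: list[str]) -> None:
        self.pool = set(servers)

    def remove(self, server: str) -> None:
        self.pool.discard(server)

    def add(self, server: str) -> None:
        self.pool.add(server)


def rolling_update(lb: LoadBalancer, servers: list[str], versions: dict[str, str],
                   new_version: str, healthy: Callable[[str], bool]) -> list[str]:
    """Upgrade servers one at a time; return the servers that were upgraded."""
    upgraded: list[str] = []
    for server in servers:
        lb.remove(server)                # 1. take it out of the pool
        versions[server] = new_version   # 2. deploy (stand-in for real tooling)
        if not healthy(server):
            break                        # leave the bad server out and stop the rollout
        lb.add(server)                   # 3. put it back in the pool
        upgraded.append(server)
    return upgraded
```

If the health check fails on, say, B, the loop stops with A on the new version and C still on the old one, which is exactly the mixed-version state described above.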

Blue/Green deployment

To keep the explanation simple, let us keep the same number of servers and the same names. In this strategy, however, you have as many new servers as existing ones. Oops, that sounds confusing. So let us say A, B and C form the Green cluster, serving version 1 of the software. To release version 2, you deploy your software to servers D, E and F, which we can call the Blue cluster. Then you run some fantastic automated tests against this cluster. If all looks good, you switch traffic on the load balancer over to the Blue cluster. This frees up the Green cluster, which can be kept on standby for a quick rollback or used for the next release.

The key difference between this strategy and the previous one is that you deploy changes to a new set of servers and then switch all traffic at once after running some tests.
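In code, the heart of blue/green is a single pointer that says which cluster is live. This Python sketch assumes a hypothetical `BlueGreenRouter` whose `live` attribute is what the load balancer reads: deploying only touches the idle cluster, and both the switch and the rollback are a single assignment.

```python
from typing import Callable, Optional


class BlueGreenRouter:
    """Hypothetical router: the load balancer sends all traffic to self.live."""

    def __init__(self) -> None:
        self.clusters: dict[str, Optional[str]] = {"green": "v1", "blue": None}
        self.live = "green"

    @property
    def idle(self) -> str:
        return "blue" if self.live == "green" else "green"

    def release(self, version: str, tests_pass: Callable[[str], bool]) -> bool:
        target = self.idle
        self.clusters[target] = version  # deploy only to the idle cluster
        if not tests_pass(target):
            return False                 # live traffic was never touched
        self.live = target               # switch all traffic at once
        return True

    def rollback(self) -> None:
        # After a release, the idle cluster still runs the old version,
        # so switching back is immediate.
        self.live = self.idle
```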

A/B testing

Is this even a deployment strategy?

Well, you may be right about that. A/B testing gets its name from the two groups, A and B, which in this context are two different versions of the software. Compared to a canary deployment, where the rollout gradually widens to a larger subset, an A/B test directs a particular subset of traffic to the new version. That subset is chosen using criteria of your choosing, such as query parameters from the client, location, platform (mobile or tablet), language, and so on. It is served the new version and continuously monitored for certain key metrics.

Personally, I consider this technique the software equivalent of a randomised controlled trial in medical science, except that in medicine the groups are randomised, whereas here the group is well defined. The results are analysed and a decision is made whether to roll the feature out to the wider group, thereby redirecting all traffic from V1 to V2.
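A minimal Python sketch of the routing side of an A/B test: requests matching a defined criterion go to version B, everyone else stays on A, and each visit is recorded against its group so a metric such as conversion rate can be compared. The `Request` fields and the chosen criterion (English-speaking mobile users) are hypothetical examples of the criteria mentioned above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Request:
    platform: str  # e.g. "mobile" or "tablet"
    language: str  # e.g. "en"


def ab_group(req: Request) -> str:
    """Hypothetical criterion: English-speaking mobile users see version B."""
    return "B" if req.platform == "mobile" and req.language == "en" else "A"


visits = Counter()
conversions = Counter()


def record(req: Request, converted: bool) -> None:
    group = ab_group(req)
    visits[group] += 1
    if converted:
        conversions[group] += 1


def conversion_rate(group: str) -> float:
    return conversions[group] / visits[group] if visits[group] else 0.0
```

Nothing here is random: membership of group B is decided entirely by the criterion, which is the difference from a randomised trial.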

Canary deployment

If you are like me, you might be wondering, how did it get its name?

Canaries were once used in coal mines to detect toxic gases. No, they did not have extrasensory perception. They are simply more sensitive to toxic gases than humans, and people cared less about a bird’s death than about a human’s. So if the canary fell ill or died, it was a clear sign that humans could not safely work there.

In the deployment world, the name describes deploying code to a subset of end users before releasing it to everyone.

Let us use the same set of servers as in the earlier explanations.

Version 1 is live on A, B and C, and we have now deployed version 2 to D, E and F. Instead of switching all traffic at once, we gradually route a small subset of traffic to the new cluster, keep monitoring, and decide whether to do a full rollout or a rollback. This gives you fine-grained control over whether to go ahead with the rollout, but it certainly needs good monitoring and alerting.
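The gradual part of a canary rollout is usually a traffic weight that increases in steps while a metric stays healthy. This Python sketch assumes users are bucketed by a stable hash of their ID, so a given user keeps seeing the same version, and that `error_rate` is a hypothetical callable reading from your monitoring. The step percentages and the 1% threshold are arbitrary examples.

```python
import hashlib
from typing import Callable


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket in the range 0-99."""
    return int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100


def route(user_id: str, canary_percent: int) -> str:
    # Users in the lowest canary_percent buckets are sent to the new cluster.
    return "v2" if bucket(user_id) < canary_percent else "v1"


def canary_rollout(error_rate: Callable[[int], float],
                   steps: tuple[int, ...] = (1, 5, 25, 50, 100),
                   max_error_rate: float = 0.01) -> int:
    """Raise the canary weight step by step; return the final percentage (0 = rolled back)."""
    for percent in steps:
        # In reality you would wait and collect metrics at each step before checking.
        if error_rate(percent) > max_error_rate:
            return 0  # roll back: all traffic returns to v1
    return steps[-1]
```

Because a user's bucket never changes, anyone who got v2 at 5% still gets it at 25%, which keeps the experience consistent while the rollout widens.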

Shadowing

The clue is in the name. The strategy implies one cluster shadowing the moves of another.

For example, say the Green cluster is live with V1, and we have now deployed V2 to the Blue cluster.

All traffic going to the Green cluster is also mirrored to the Blue cluster, but only the Green cluster's responses are returned to clients. The Blue cluster simply lets you test with real production traffic.

This is an expensive way to test new code, since you are running a second full cluster, but a pretty good way to spot anomalies.
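The core of shadowing fits in one function: serve the request from the live cluster, send a copy to the shadow cluster, compare the answers, and never let the shadow's response or its failures reach the client. In this Python sketch the two handlers are hypothetical callables standing in for calls to the Green and Blue clusters; in a real setup the mirroring is typically done by a proxy or service mesh, and the shadow call is made asynchronously.

```python
from typing import Callable

Handler = Callable[[str], str]


def shadowed(live: Handler, shadow: Handler, mismatches: list[str]) -> Handler:
    """Wrap the live handler so every request is also replayed against the shadow."""

    def handle(request: str) -> str:
        response = live(request)
        try:
            shadow_response = shadow(request)
            if shadow_response != response:
                mismatches.append(f"{request}: live={response!r} shadow={shadow_response!r}")
        except Exception as exc:  # shadow failures are recorded, never surfaced to clients
            mismatches.append(f"{request}: shadow error {exc!r}")
        return response  # clients only ever see the live cluster's answer

    return handle
```

One caveat worth thinking about: mirrored requests that write data (payments, emails) can cause duplicate side effects, so the shadow cluster usually needs stubbed or isolated downstream dependencies.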

Summary

Continuous integration, delivery and deployment will improve your confidence in delivering software and, in turn, the speed at which a change gets from development to production. The earlier you invest in thinking about and implementing measures to make this a reality, the better it will be for your team and organisation.
