Background

Those new to the software industry might not even have heard of containers.

When I ask people who rave about containers what they are, they compare them to virtual machines, but I never really got the details out of anyone. Or, you could say, I was never satisfied with the explanations.

So I thought I would go on a journey to find out more about it myself, condense what I learned, and write it up from the perspective of someone trying to understand what the hype is all about.

Let us familiarise ourselves with the terminology first.

Terms or Jargon or Names or that sort of thing

What is a container?

A container is a packaged unit of software.

You mean like a NuGet package? Or an npm package?

A little bit like that. But maybe more.

A container is a packaged unit of software that has all the dependencies the application needs to run reliably and quickly from one computing environment to another.

So unlike a NuGet or npm package, which is just a library or a framework, a container holds the whole executable application, which can work on any machine, apparently!

A container relies on virtualising the operating system and not the physical hardware. Hence it uses fewer resources than a virtual machine.

Containers are built to be deployed to a particular operating system. Thus, to run a container built for Linux, you need a Linux machine, whether virtual or real.

What is Docker?

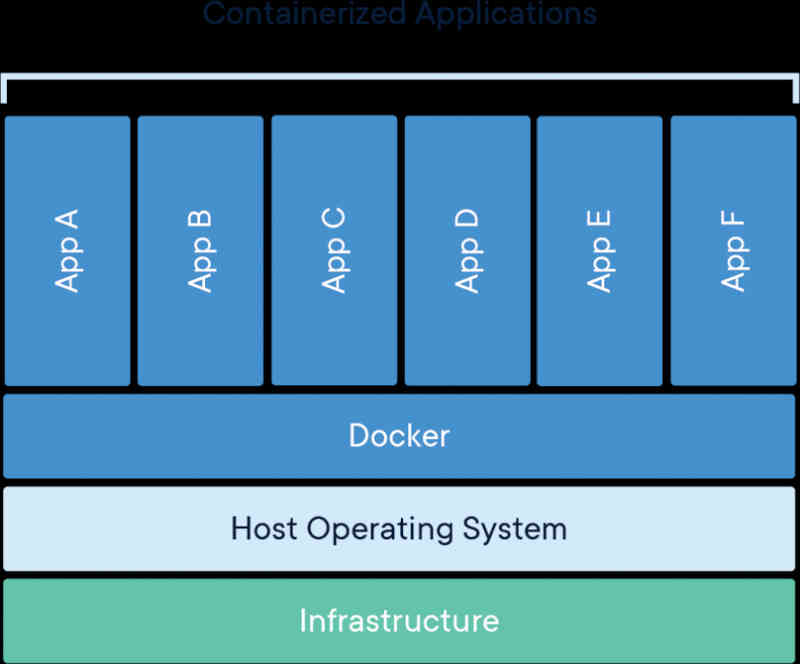

Docker is a suite of platform-as-a-service products that use virtualisation at the operating system level to deliver software in packages called containers.

Such Docker containers are isolated from one another, can be deployed on the same host or on different hosts, and can communicate with one another too.

Docker builds an image - your project code and its dependencies. This image can be run as multiple containers on any machine that has Docker installed.
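To picture "one image, many containers", here is a minimal sketch in Python that only builds the `docker run` commands, without executing them. The image name `my-app:1.0`, the `demo-N` naming scheme and the helper `docker_run_commands` are assumptions made up for illustration; `--detach` and `--name` are standard `docker run` options.

```python
from typing import List


def docker_run_commands(image: str, count: int) -> List[List[str]]:
    """Build one `docker run` command per container, all from the same image."""
    commands = []
    for index in range(1, count + 1):
        # Hypothetical naming scheme: demo-1, demo-2, ...
        name = f"demo-{index}"
        # --detach runs the container in the background;
        # --name gives each container its own readable name.
        commands.append(["docker", "run", "--detach", "--name", name, image])
    return commands


if __name__ == "__main__":
    # "my-app:1.0" is a placeholder image name, not a real published image.
    for command in docker_run_commands("my-app:1.0", 3):
        print(" ".join(command))
```

Each command starts a separate container, yet all of them point at the same image. On a machine with Docker installed, a list like this could be handed to `subprocess.run`.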

Docker sits on top of the host operating system and is able to run multiple images as individual container processes, each working as if it were running on its own machine.

What confused me most was why Docker (a container engine) was even necessary. Why could we not do in virtual machines what we do with Docker?

What is a virtual machine?

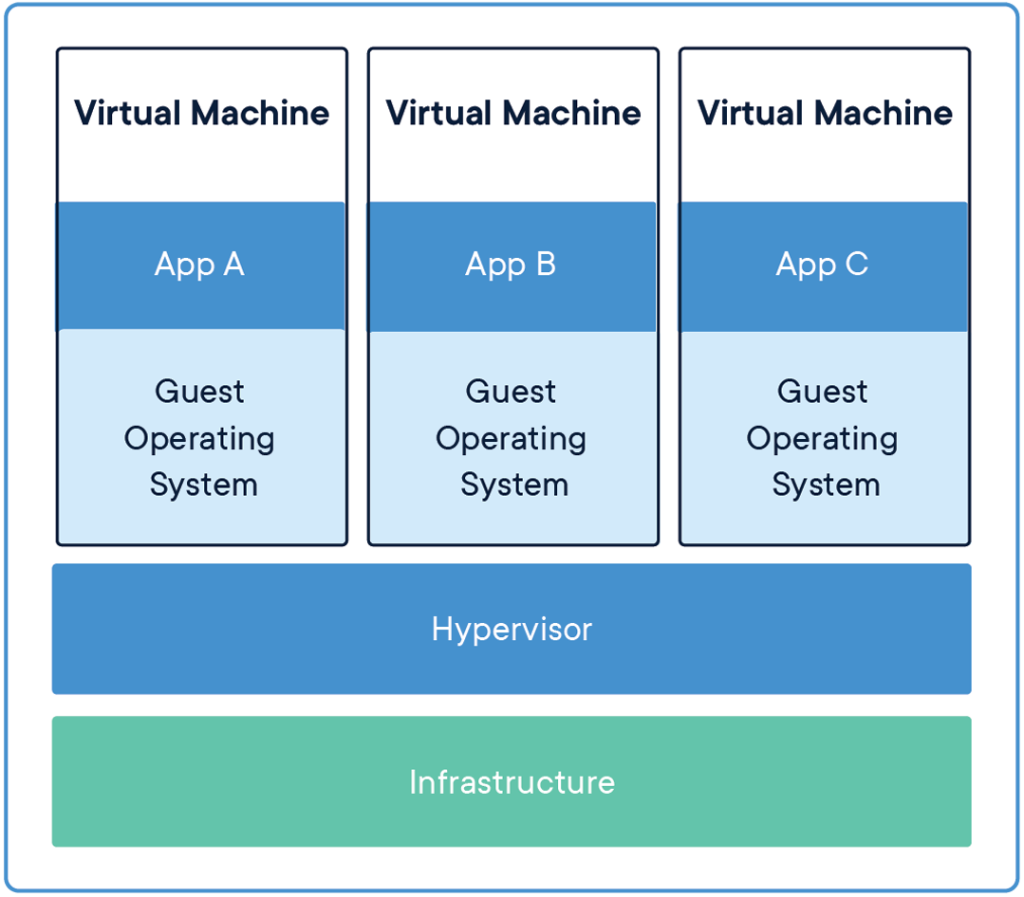

A virtual machine is a compute resource that uses software instead of a physical computer to run programs and deploy apps. There may be more than one virtual machine, aka guest, running on an actual physical machine, aka host.

Every virtual machine runs its own operating system and functions separately from the other virtual machines, despite running on the same host machine. Sounds pretty cool, huh?

Well, this technology allows me to run my own Ubuntu system on my PC.

In my previous firm, we used to spawn virtual machines to host a group of SQL Server instances for different environments. We used to isolate applications by virtual machine, to prevent development apps from affecting production apps and vice versa. A virtual machine provides a fully isolated virtual environment.

The beauty of a virtual machine is that software running inside it cannot tamper with the host machine. Nor can virtual machines tamper with one another. As long as you have the memory, processing and storage capacity to host a certain number of virtual machines, you can exploit the same physical resources for multiple virtual instances, saving the money you would otherwise have had to spend on additional hardware.
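To make the capacity point concrete, here is a rough sketch in Python. Every number and name in it (`Resources`, `max_vms`, the amounts reserved for the host) is made up for illustration. It also assumes that all virtual machines are the same size and that nothing is overcommitted, which is a simplification rather than a rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    memory_gb: int
    cpu_cores: int
    storage_gb: int


def max_vms(host: Resources, reserved: Resources, per_vm: Resources) -> int:
    """Return how many identical VMs fit on the host.

    Simplifying assumption: no overcommitment, so every VM gets
    its full share of memory, CPU cores and storage.
    """
    fits = [
        (host.memory_gb - reserved.memory_gb) // per_vm.memory_gb,
        (host.cpu_cores - reserved.cpu_cores) // per_vm.cpu_cores,
        (host.storage_gb - reserved.storage_gb) // per_vm.storage_gb,
    ]
    # The scarcest resource decides; never report a negative count.
    return max(0, min(fits))


# Hypothetical figures, purely for illustration.
host = Resources(memory_gb=64, cpu_cores=16, storage_gb=2000)
reserved = Resources(memory_gb=8, cpu_cores=2, storage_gb=100)  # kept for the host itself
per_vm = Resources(memory_gb=8, cpu_cores=2, storage_gb=100)

print(max_vms(host, reserved, per_vm))  # prints 7
```

With these figures, memory and CPU run out at seven virtual machines while storage would allow nineteen, so the answer is seven guests on one physical box.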

Virtual machines on hardware - the hypervisor (virtual machine runner/monitor) is key. Notice how the app, the OS and other dependencies are all packaged into a virtual machine.

What is a hypervisor?

This is probably the coolest technology, the one that made it all possible in the first place. A hypervisor is actually a Virtual Machine Monitor, or, affectionately (just kidding), a Virtualiser. This is the secret recipe of every cloud platform.

It is kind of an emulator - hardware or software that lets one computer system behave like another.

The hypervisor runs any number of virtual machines on the host, which, as explained earlier, is the physical machine. It provides the guest operating systems with a virtual operating platform and manages their execution.

Apparently the origins of this technology date back to 1967!

Comparing a container with a virtual machine

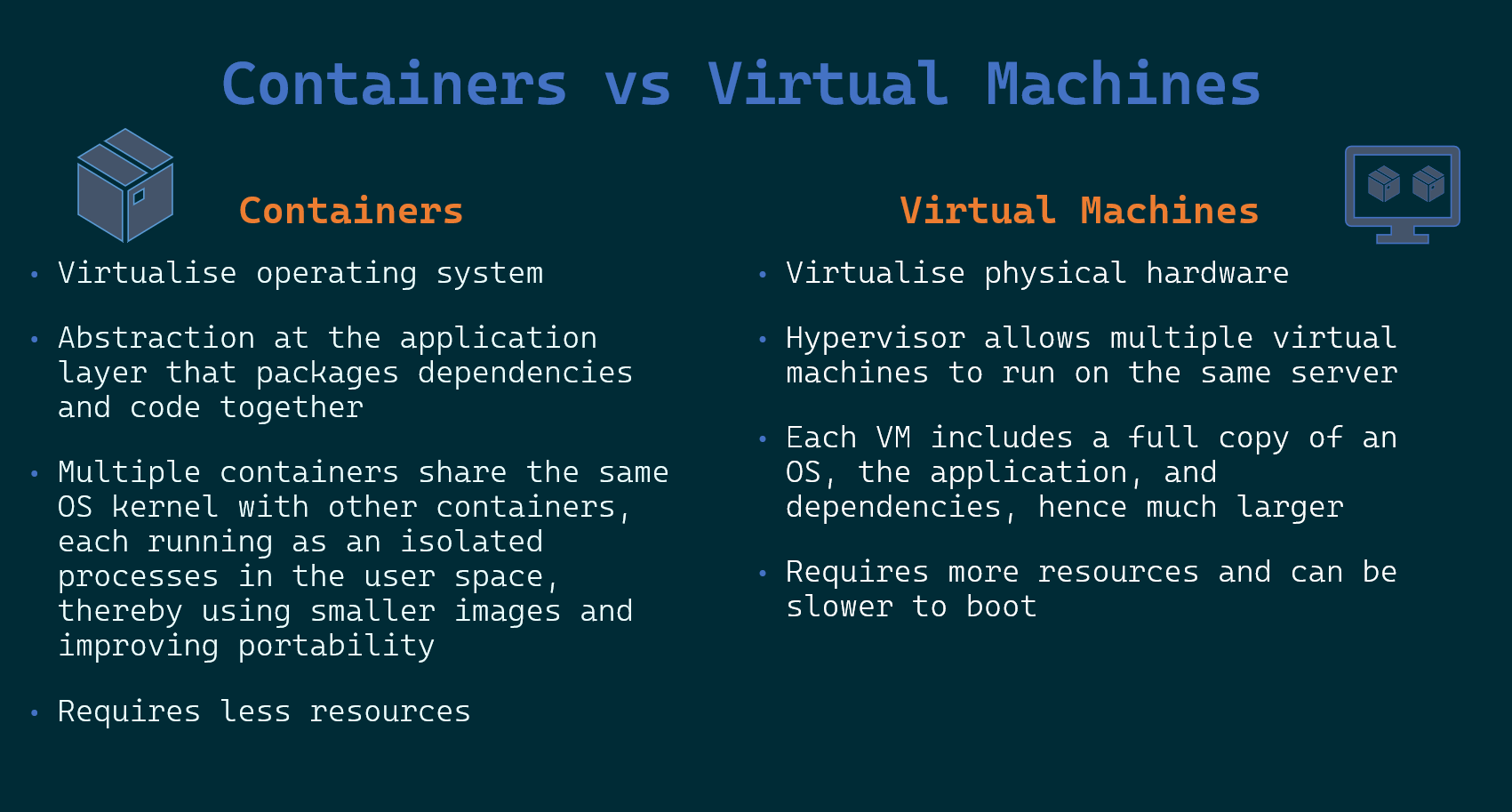

Containers and virtual machines have similar resource isolation and allocation benefits but function differently, because containers virtualise the operating system instead of the hardware, whereas virtual machines are literally a virtual version of a computer. Let us do a comparison.

I hope the comparison above helped you understand the key differences.

And this means that, when used together, they can provide a great deal of flexibility for deploying and managing applications.

How did Docker become such a big deal?

Docker launched and developed a Linux container technology that was pretty good from the start - portable and flexible.

They then open-sourced the container libraries and partnered with the community of contributors to make it better. Docker donated the container image specification and the related code to the Open Container Initiative to establish standardization in the container ecosystem.

They also gave back by donating the containerd project to the Cloud Native Computing Foundation. containerd is now an industry-standard container runtime that leverages runc - a command line tool to spawn and run containers.

The emphasis on ease of use, speed of getting up and running, isolation and modularity (which also brings scalability) made Docker such a big deal.

Like every cool runtime environment, Docker has a place where you can share your images publicly with others who might need them. Like GitHub, but Docker Hub. These images can be retrieved from anywhere and executed anywhere.

When would I need Docker?

There are plenty of scenarios where you want to run applications developed by multiple teams on slightly different versions of a certain application runtime.

In these cases, containerisation would save a lot of resources. Imagine having to run one app that needs JRE 8 and another that runs on JRE 7. With containerisation, you could create two images, each with its own dependencies, and run them side by side on the same machine!
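As a rough sketch of the JRE 7 and JRE 8 scenario, the Python below generates one Dockerfile per app, differing only in the base image. The base image names `example/jre:7` and `example/jre:8`, the jar file names and the helper `dockerfile_for` are placeholders made up for illustration, so swap in whatever images your team actually uses. `FROM`, `WORKDIR`, `COPY` and `CMD` are standard Dockerfile instructions.

```python
def dockerfile_for(base_image: str, jar_name: str) -> str:
    """Return the text of a minimal Dockerfile for a Java app packaged as a jar."""
    lines = [
        f"FROM {base_image}",  # the runtime this app depends on
        "WORKDIR /app",
        f"COPY {jar_name} /app/{jar_name}",
        f'CMD ["java", "-jar", "/app/{jar_name}"]',
    ]
    return "\n".join(lines) + "\n"


# Placeholder image and jar names, purely for illustration.
apps = {
    "legacy-app": dockerfile_for("example/jre:7", "legacy-app.jar"),
    "new-app": dockerfile_for("example/jre:8", "new-app.jar"),
}

for name, text in apps.items():
    print(f"# Dockerfile for {name}")
    print(text)
```

Each image carries its own Java runtime, so neither app cares which version, if any, is installed on the host.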

Docker can be used to create a deployable unit that goes through a continuous integration and deployment pipeline. There are even tools that enable this, such as Drone or Shippable.

A Docker image can be used to run an application locally, as if it were running in production. How many times did you wish for that when there was a bug in production? Of course, you need the physical resources to run that image on your machine.

As mentioned earlier, you can create images and share them via Docker Hub to collaborate with others! In this way, Docker Hub acts like GitHub but for container images. You get to version the images, make changes and track the history of changes made to an image.

How does Docker do this?

Docker Engine is the key to Docker's containers. It is the de facto runtime, available on several different operating systems, that lets the images you build run on any host as containerised applications.

When would I need a virtual machine?

Whenever there is a need to simulate multiple physical systems, you could consider virtual machines instead.

Hardware maintenance can be hard. Even acquiring the right hardware takes time. Back in the day, I remember having to place orders before a quarter-end so that the stuff we needed got allocated before the end of the following quarter. Organisations that operate this way might benefit a lot from virtualisation, which speeds up such allocations. Although I doubt this is the case today.

Adding to the earlier point, consolidating infrastructure management at the virtual machine level means you sometimes get tools from the virtual machine provider that help you manage all your applications. VMware has some cool dashboards for managing applications on virtual machines.

You could use a virtual machine to try or learn a new operating system. I did this, where I installed Ubuntu and Debian as different virtual machines on my workstation to try out the differences. You could even run Windows 98 in a virtual machine, to give you those 90s feels.

If you are a developer building applications for platforms that you do not generally use, you could create a virtual machine on your workstation with the target platform installed. Although virtual machines cannot always replace real hardware, they do open a lot of doors.

If you are a malware researcher, you could create virtual machines and tinker with malware inside them, safely on your workstation. As the virtual machine isolates the entire environment for that malware, your machine will stay intact. Similarly, if you want to try out suspicious software, you could spin up a virtual machine and try it out there before installing it on your primary workstation!

I still have my Ubuntu images on my external HDD, which I can copy to my current workstation to start a virtual machine, as if I were switching on that machine after all this while!

Summary

Every tool has its use. Use the one that serves your purpose. That’s the way to go. I hope all this text here helped you understand the concepts better.